Introduction

In the 2025 Australian federal election, artificial intelligence (AI) is quietly becoming a powerful force in shaping and disseminating political messages. AI has moved beyond back-end tasks such as data analysis and is beginning to write campaign ads, generate memes, and even craft complete narratives.

(Image: Australian Federal Election.)

The Liberal Party’s recent release of Australia’s first fully AI-generated advertisement, a video in which an alien character warns viewers about rising fuel taxes, has sparked heated discussion. Applying AI to politics is certainly an innovation, but regulation in Australia has not kept pace. When voters encounter AI-generated content while browsing social media, it is often difficult for them to judge whether the information is true, which makes it easier for such content to steer public opinion. The line between human-made and AI-generated content is becoming increasingly blurred. This raises a question: is Australia ready to regulate the impact of AI on democracy?

The Liberal Party’s AI Ad and Its Implications

(Image: Screenshot of the AI-generated promotion video.)

In April 2025, the Liberal Party released an AI-generated political advertisement for the federal election, in which an AI-generated alien character issued a warning about the proposed fuel tax. The advertisement was created with generative tools such as Midjourney, Sora and Runway, and quickly went viral on platforms like TikTok and X (formerly Twitter). It is the first political advertisement in Australia to be generated entirely by AI. Some people appreciate its creativity, but more critical voices point out that it may mislead voters and that it lacks content verification.

Ethical Risks and Regulatory Silence in Australia

(Image: Australian Electoral Commission.)

On the one hand, AI-generated political content has encouraged voter participation and given campaigns new creative tools. On the other hand, it brings worrying problems, most notably the spread of false and inaccurate information. Because AI enables campaign teams to release personalized messages on a large scale, it also significantly lowers the threshold for creating misleading or emotionally manipulative content, which is likely to raise ethical and legal challenges. Although these risks are becoming more visible, the Australian Electoral Commission (AEC) has yet to introduce any formal regulations specifically targeting the use of AI in political campaigns. At present, the AEC’s political advertising guidelines cover only the general requirements of “authorized communication” and “authenticity and reliability” (AEC, 2025). Meanwhile, the Australian Strategic Policy Institute (ASPI) stated in its 2025 report that political actors in Australia continue to exploit AI to manipulate public opinion, from fabricating facts to distorting narratives. The report also finds that Australia is not fully prepared to deal with the electoral impact of AI, lacking both forward-looking regulation and public education on how to identify AI content (ASPI, 2025).

Expert Interview: Why Governance Cannot Wait

To gain a deeper understanding of the related risks, I interviewed Mr. Lee, a master’s student in computer science at the University of Sydney. In his view, AI can generate highly emotionally manipulative content on a large scale, “but there are almost no effective restraint mechanisms”, and he warns that political parties are very unlikely to restrain themselves. He believes that Australia lags far behind other democratic countries in adapting election regulations to generative AI. He called for prompt reforms, including mandating the attribution of AI content, requiring platforms to take responsibility, and enhancing the public’s ability to identify AI content. As he said, “This is not only a technical issue, but also a democratic one.” The full interview audio can be found at the link below.

Public Confusion and the Limits of AI Literacy

(Image: Public Concerns About AI-Generated Content.)

The public’s confusion about AI-generated content is becoming an increasingly serious problem. In fact, most people struggle to distinguish AI-generated content from real media. According to Adobe’s “Authenticity in the AI Age” report released in 2025, only 12% of Australians are confident in identifying political deepfake content, and 86% say that generative AI makes it more difficult to distinguish between true and false content (Adobe, 2025). A 2024 study titled “As Good As A Coin Toss” found that participants distinguished AI-generated images, audio and video from real content at an accuracy close to that of random guessing (Di Cooke, 2024). Furthermore, a global study jointly released by KPMG and the University of Melbourne in 2025 shows that only 24% of Australians have received AI-related training, which is lower than the global average of 39%. Taken together, these findings point to relatively low AI literacy among Australians, a weak ability to identify misleading information, and a high level of public concern about both.

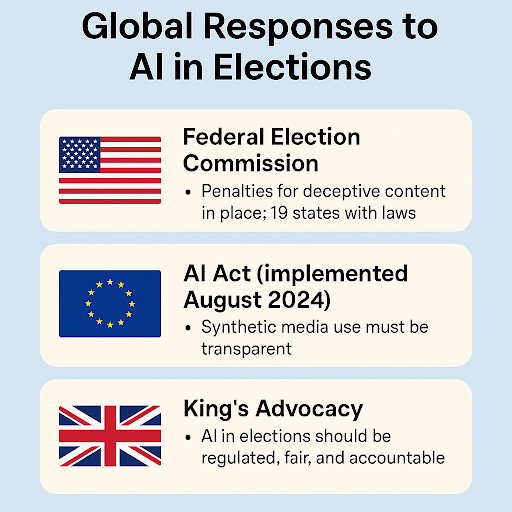

Global Responses: What Other Democracies Are Doing

(Image: Global Responses to AI in Election.)

Although Australia’s regulation of AI is still at a very early stage, other democratic countries have begun to actively address the issue and seek solutions. In the United States, the Federal Election Commission issued an interpretive rule explaining how existing regulations apply to the use of artificial intelligence in political campaigns. The rule confirms that existing regulations already provide penalties for generating deceptive content, and 19 states have passed relevant laws (FEC, 2024).

The European Union’s AI Act entered into force in August 2024, explicitly requiring that the use of synthetic media in political contexts remain transparent (European Commission, 2024). The King of the United Kingdom has also advocated that artificial intelligence operate within a regulatory framework and that election communication demonstrate fairness and accountability (the British Government, 2024). These international initiatives highlight the urgent need to protect democracy and safeguard people’s rights and interests by regulating and standardizing AI-synthesized political content, which is precisely the issue that Australia has yet to take seriously.

Proposed Solutions: A Multi-Layered Approach

(Image: Centre for Emerging Technology and Security)

Research suggests that dealing with AI-driven political manipulation requires solutions at several levels. In its 2024 report, the Centre for Emerging Technology and Security (CETaS) in the UK pointed out that AI-generated false information amplifies harmful narratives and intensifies political polarization, and that it must therefore be addressed by strengthening digital literacy education and building a strong public broadcasting ecosystem (CETaS, 2024). First, the authorities should enforce mandatory disclosure rules that require all campaign materials to clearly label AI-generated content. Second, social platforms must take responsibility for promptly identifying and removing false content during the election period. Third, the government should make long-term investments in civic education and digital literacy to strengthen voters’ ability to judge the authenticity of political information. Taiwan’s 2024 presidential election also demonstrated a multifaceted response model: a combination of legislation, fact-checking, and public outreach that effectively curbed deepfakes and synthetic information (Thomson Foundation, 2024).

Conclusion

The influence of AI on Australian federal elections is growing. It is no longer merely theoretical but is already having an impact in practice. From synthetic videos to AI-generated political memes, AI-driven manipulation is spreading far faster than current laws can respond. If no prompt action is taken, public trust, fair elections and democratic participation in Australia will face serious threats. Other countries have taken action, and Australia should no longer hesitate. The question is no longer whether AI will change elections, but whether democratic systems can keep pace.
